A Restless Wanderer

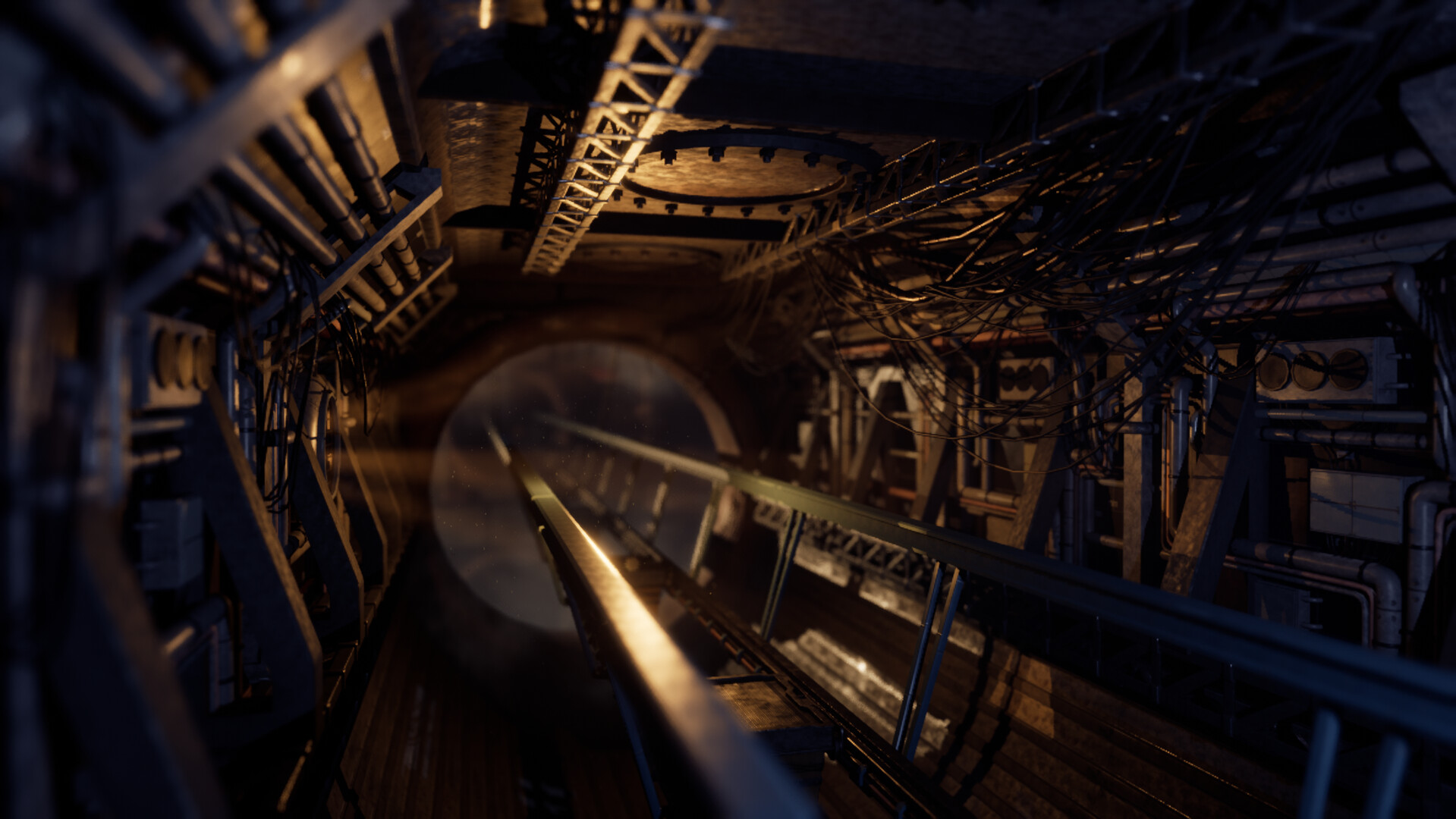

The City of Machines wanders restless through the barren wastes. Here, everything is machines, even the inhabitants. Ever onward, the City chases Optimum: the last, fleeting oasis of time left in the world. However, a new threat looms on the horizon.

A Mechanical Puzzle Adventure

Mechanical Sunset is a mechanical puzzle adventure. In the game, the player will get the chance to explore the Wandering City of Machines, a place its robotic inhabitants have made home. This is a bizarre place where the warm, inviting lights of a cabin mingle with heavy machinery, cascades of sparks, and forests of wire and pipe.

On their way through the metal jungle, the player will have to learn to operate the aging machinery: buttons, levers, gauges and lights guide them as they awaken the slumbering leviathan.

Features

- Navigate through the bizarre and unfamiliar world of the Walking City of Machines, where mechanical meets countryside comfort.

- Encounter obstacles that must be overcome by learning to operate large and unfamiliar machinery.

- Learn to interpret what the machines are telling you by reading indicator lights and gauges connected to accurately physics-simulated components.

- Manipulate the world around you using old, analogue control panels. Satisfying buttons and chunky levers are your means to get the old machines running again.

- Careful: the inattentive player may end up breaking the machinery and have to rely on alternative solutions to get what/where they want.

- Talk to the interesting robotic lifeforms around the City.

- Discover their wishes, dreams, and ideals.

- Help them with their tasks – or don’t.

- Ask them for help with puzzles that you can’t crack on your own.

- Discover the truth behind the temporal apocalypse.

- Time is freezing, and it is up to the player to figure out why, and how to stop it.

This is a game for people who like pressing buttons.

Hi there!

It's been a decent while since we got a devlog out, as we've been kept busy with playtesting, polish, bug fixes, and general business endeavours. A lot of the things that have happened since the last one deserve devlogs of their own, to be honest, but today I'm going to talk a little about a subject that is very important to Mechanical Sunset, and really almost any game: dialogue!

It has been clear to us from the beginning that Mechanical Sunset will require a fairly advanced system for handling dialogue. Robots in the City should feel alive and responsive, react to the player's actions, offer advice and hints, and generally behave in a [strike]human[/strike]robot-like manner.

--- Author: Fredrik "Olaxan" Lind ---

A lot of people are familiar with the concept of a dialogue tree: a branching set of character replies, and options for the player to choose between. A dialogue tree allows you to offer dialogue conditionally, meaning robots can be programmed to only say certain things based on the state of the current scene. A common example in the MechSun demo is robots commenting on the state of a nearby light, which the player might have turned off.

Here is an example of one such tree, as implemented in the current demo.

Users who are familiar with Unreal may cringe and shudder at the sight of this, for reasons I'll soon explain.

This is the logic of TUTIS' idle commentary, which appears over his head when the player approaches.

A Selector node will check its children from left to right, continuing with the first one that reports success. It can be used to output dialogue conditionally.

A Sequence node will simply execute its children in order (left to right), aborting if any of them fails.

This simple logic has allowed us a lot of advanced world interactions with relative ease!

For instance, AMSTRAD the robot notices if the player has turned off his ceiling light but fixed the power to the area, and TUTIS offers what is pretty much a step-by-step solution to the first power puzzle, if prompted.
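The Selector and Sequence rules described above can be sketched in a few lines of Python. This is only an illustration of the node semantics, not Unreal's Behavior Tree API; the `light_on` flag and the spoken lines are made-up placeholders.

```python
from typing import Callable, Dict, List

# A node takes the scene state and a list that collects spoken lines,
# and returns True on success or False on failure.
State = Dict[str, bool]
Node = Callable[[State, List[str]], bool]


def selector(*children: Node) -> Node:
    """Try children left to right; succeed with the first child that succeeds."""
    def run(state: State, out: List[str]) -> bool:
        return any(child(state, out) for child in children)
    return run


def sequence(*children: Node) -> Node:
    """Run children left to right; abort as soon as one of them fails."""
    def run(state: State, out: List[str]) -> bool:
        return all(child(state, out) for child in children)
    return run


def condition(key: str, expected: bool) -> Node:
    """Succeed only if the scene state holds the expected value for `key`."""
    return lambda state, out: state.get(key) == expected


def say(line: str) -> Node:
    """Emit a line of dialogue; always succeeds."""
    def run(state: State, out: List[str]) -> bool:
        out.append(line)
        return True
    return run


# Hypothetical idle commentary: comment on the light if it is off,
# otherwise fall back to a generic remark.
idle_commentary = selector(
    sequence(condition("light_on", False), say("Who turned off the light?")),
    say("Lovely evening, isn't it?"),
)


def run_tree(tree: Node, state: State) -> List[str]:
    """Evaluate the tree once and return the lines that were spoken."""
    out: List[str] = []
    tree(state, out)
    return out
```

Calling `run_tree(idle_commentary, {"light_on": False})` takes the first branch, while any other state falls through to the generic remark.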

[hr][/hr]

Fredrik Olaxan Lind

Speech Therapist

So what's bad about the above?

Well, Unreal devs will notice that the images depict a Behavior Tree.

Behavior Trees are common in game engines to provide a designer-friendly interface for AI programming.

They are meant to provide an intuitive logic flow for creating agents that can chase the player, investigate noises, or mill around randomly: the sort of thing we're used to from open-world games.

They are NOT meant for dialogue!

There are a few reasons for this, and I'll try to explain some of them.

This is a DIALOGUE TREE. It is the result of us porting all dialogue to the frankly excellent dialogue plugin NotYetDialogue for Unreal.

If there's a takeaway to be had from this devlog, it's that plugin. Remember the name for whenever you find yourself in a situation that demands any sort of dialogue, because it is excellent.

Now, what's the difference between that graph and the Behavior Tree?

The main difference is that a Dialogue graph allows for multiple connections to one node. As you can see in the picture above, several dialogue choices feed into the central node, and the dialogue proceeds from there.

That is not allowed in a Behavior Tree. Now, this might seem like a massive downside (and it is!), but using Sequences smartly will allow for very similar behavior, so no deal-breaker yet.

Another difference is that nodes in Behavior Trees are meant to be more or less independent of one another. This led to challenges for us: we could not visualise in the dialogue UI which dialogue paths had already been taken, which ones would end the dialogue, and so on. There was no real knowledge of what was coming next in the tree.
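To make the two differences concrete, here is a small Python sketch of a dialogue graph in which several choices lead into the same node, and in which the UI can look ahead to see which choices have been visited or will end the conversation. It does not use the plugin's API; the node ids, lines and helper names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class DialogueNode:
    text: str
    # Maps the player's choice text to the id of the next node.
    choices: Dict[str, str] = field(default_factory=dict)


# Hypothetical conversation: two different choices converge on "hub".
GRAPH: Dict[str, DialogueNode] = {
    "start": DialogueNode("Hello, traveller.", {
        "Ask about the light": "light",
        "Ask about the power": "power",
        "Goodbye": "bye",
    }),
    "light": DialogueNode("It went dark a while ago.", {"Continue": "hub"}),
    "power": DialogueNode("The fusebox is acting up.", {"Continue": "hub"}),
    "hub": DialogueNode("Anything else?", {"No, thanks": "bye"}),
    "bye": DialogueNode("Safe travels."),
}


def describe_choices(node_id: str, visited: Set[str]) -> List[str]:
    """Label each choice so a UI could show visited paths and dialogue exits."""
    labels = []
    for choice, target_id in GRAPH[node_id].choices.items():
        tags = []
        if target_id in visited:
            tags.append("visited")
        if not GRAPH[target_id].choices:
            tags.append("ends dialogue")
        labels.append(f"{choice} [{', '.join(tags)}]" if tags else choice)
    return labels


def parents_of(node_id: str) -> List[str]:
    """List every node with a connection into `node_id`."""
    return [nid for nid, node in GRAPH.items() if node_id in node.choices.values()]
```

Because every node knows its outgoing connections, the look-ahead in `describe_choices` is a plain dictionary lookup, which is exactly the knowledge of "what comes next" that independent tree nodes lack.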

[hr][/hr]

So obviously Behavior trees are a bad choice for dialogue. Why did we ever go with them to begin with?

The reasons are twofold!

1. A very popular tutorial that will come up when you search for these things will lead you down the path to ruin.

2. It's actually quite powerful to have dialogue and AI behavior tightly coupled!

With dialogue baked into the AI behavior tree, we can take full advantage of Unreal's AI Tasks, such as moving, animating, and modifying AI state, directly from the dialogue.

This setup, for instance, allowed us to rotate a robot to point at a specific door in the scene, using AI Tasks.

We could have robots being told to walk somewhere, and obey. We could have robots commenting on their own AI actions by having them speak through their ambient dialogue (the widget above their heads).

Now, is this type of behavior impossible with a more loosely coupled dialogue-AI relationship? No, certainly not. It was simply nice while it lasted, and made it more difficult to identify the fact that the whole approach was a fairly bad idea.

[hr][/hr]

I'm happy to end this devlog by telling you that transitioning our dialogue to the new system was a neat and quite quick affair, and that the new plugin will allow us MUCH more flexibility going forwards.

I would like to again mention the name NotYet Dialogue (https://gitlab.com/NotYetGames/DlgSystem ), because the plugin deserves the recognition. It is robust against error, flexible, and designed in a manner that fits most games. It is highly recommended.

And if there's a takeaway to be had apart from that, perhaps it'd be that even a system that seems good in a lot of regards can be undermined by grievances that are hard to fix, and that one shouldn't be afraid to take a few steps back and recognize problems sooner rather than later. I liked our previous method of doing dialogue, but the new way is simply better in all regards.

Despite a lot having changed under the hood, the UI has received only minor touch-ups.

Well, porting the dialogue may have been painless, but it was also mind-numbingly dull and repetitive, with no fewer than 34 trees (including ambient commentary) having been migrated manually.

With that out of the way, I am more than happy to say: Me, the Gumlins, and the robots wish you a good weekend!

See you next time!

Hello people! I'm sorry for missing last week's devlog; this week I got reminded of it.

Right now I am working on creating destructible meshes with the new Chaos destruction tool that has been added to Unreal Engine 5. The idea behind having destructible meshes in this case is having throwable and fragile items in the world (maybe a coffee mug, or a window) which you can break. It adds a lot of realism and interaction to a scene! It would also be possible to have larger structures crumble for cinematic effect.

--- Author: Simon "Vito" Gustavsson ---

Chaos is so cool and satisfying when you get it to work, the keyword here being "when".

There are dozens of settings for each component in the item which need to be tweaked in order for it to feel even slightly realistic.

The Chaos destructible meshes are built using something called a geometry collection, which is basically a collection of meshes. You take a mesh and create a geometry collection from it by fracturing it into smaller pieces.

In order to make the mesh not explode into a million small pieces upon spawning, there are parameter settings for the amount of force a single fragment can withstand before breaking away from the larger mesh. There are also several parameters here for what are called clusters. A cluster is a smaller piece of a fracture fragment, which has its own thresholds for how much punishment it needs to be put under in order to break into even smaller pieces. In theory you can create a mesh that can be granulated an unlimited number of times, but that isn't very good for performance, one might suppose.
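As a rough mental model of those thresholds, here is a toy Python sketch. It is not the Chaos API and ignores everything physical about real fracture; the class, the numbers and the coffee mug are all made up to show how nested pieces with their own thresholds behave.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cluster:
    name: str
    damage_threshold: float  # force needed before this piece breaks apart
    children: List["Cluster"] = field(default_factory=list)


def apply_force(piece: Cluster, force: float) -> List[str]:
    """Return the names of the pieces left over after applying `force`."""
    # A piece below its threshold, or with nothing to split into, stays whole.
    if force < piece.damage_threshold or not piece.children:
        return [piece.name]
    remaining: List[str] = []
    for child in piece.children:
        remaining.extend(apply_force(child, force))
    return remaining


# Hypothetical coffee mug: it cracks easily, but the body is tougher.
mug = Cluster("mug", 10.0, [
    Cluster("handle", 5.0),
    Cluster("body", 50.0, [Cluster("shard_a", 80.0), Cluster("shard_b", 80.0)]),
])
```

A gentle bump leaves the mug intact, a moderate force separates handle and body, and only a large force breaks the body into shards. Deeper nesting means more pieces to simulate, which is where the performance cost comes from.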

Now the fun part, actually destroying them!

To destroy a mesh we need to create some kind of force to make the object buckle under the pressure. There are several types of force you can apply.

One way is to create a force field. At the moment I have only tried a couple of them, but here are two examples:

Video titled Linear Force (explosionesque)

Video titled External Strain

Video titled External Strain continued

The explosion is really cool, but I think the straining is even more impressive! It's so well done, and makes me feel like we have come a long way regarding physics in video games. What impressed me was how the strain could produce multiple outcomes depending on how the mesh crumbled under its own weight. Sometimes the middle part got so strained that it collapsed the other way (as seen in the two comparison videos).

I also accidentally scaled it and got some fun, weird bugs, but it still looks cool, at the very least!

Video showing the weird bug in question

We will see how many of these will find their way into the final game, but at least you can expect some degree of destructibility in Mechanical Sunset!

Have a nice evening!

Hey everyone! Welcome back to another devlog! Today we'll be taking a closer look at some of our level design tools.

Lately I've been spending time on different Houdini tools, which we implement in our level workflow. The two tools which have received the most attention recently are our house tool and our platform tool. Both work in a similar fashion: the user simply adds boxes to the scene, adds the tool to the boxes, and then uses the parameters provided by the tool.

--- Author: Lukas "Gs" Rabhi Hallner ---

Houdini Engine is a plugin which allows you to package a node network from Houdini, expose desired parameters and then bring it over to other software, in this case Unreal Engine.

Since the cooking and simulation are handled by Houdini, it allows us to use a lot more solvers, functions and methods that wouldn't be possible in Unreal.

A scene comparison before and after adding the house & platform tools.

The House Tool

Our house tool creates traditional Swedish cabins from boxes. It creates both an interior and an exterior, with many parameters and settings: for example wall color, wallpapers, doors, room sizes and seeds for randomization. There's also support for additional details, like adding roof tiles, flower boxes, porches, balconies and antennas!

These tools can of course be used while still in development; that's one of the great things about them. For example, a level designer could build a town with the tool, and when I later add additional details and parameters to the tool, they simply have to click a button for all houses to update.

Houdini also provides a live-sync feature between Unreal and Houdini, which allows me to see the result in the game engine while working in a different software. This helps a lot to troubleshoot and to find the causes for specific errors.

The house tool node tree.

The house tool is still a work in progress, and there are many things which I'd like to add in the future: interior furnishing based on room types, ladders, additional roof details. Also more options for wear and broken houses!

One feature I'm currently working on is a system to categorize rooms into types (living room, kitchen, etc.) and then populate them with appropriate furniture accordingly.

Fortunately, Houdini doesn't require you to model everything procedurally in Houdini. By using points with certain attributes you can tell Unreal Engine to place any model, blueprint or actor in your desired way. So by having a library of assets made in, for example, Blender or taken from asset packs, we can use those together with the tool! For example, the doors placed by the tool are fully functional blueprints made by our programmers.
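The idea of "points with attributes" can be illustrated with plain Python dictionaries. This sketch runs outside Houdini and is not the actual tool: the room classification rule and the asset paths are invented, and the attribute name `unreal_instance` is used on the assumption that it is the point attribute Houdini Engine for Unreal reads to pick the asset to place.

```python
from typing import Dict, List, Tuple

Point = Dict[str, object]

# Hypothetical furniture library: room type -> Unreal asset references.
FURNITURE: Dict[str, List[str]] = {
    "kitchen": ["/Game/Props/Stove", "/Game/Props/Table"],
    "living_room": ["/Game/Props/Sofa", "/Game/Props/Lamp"],
}


def classify_room(area_m2: float) -> str:
    """Hypothetical rule: small rooms become kitchens, the rest living rooms."""
    return "kitchen" if area_m2 < 12.0 else "living_room"


def furnish(origin: Tuple[float, float, float], area_m2: float) -> List[Point]:
    """Create one attributed point per piece of furniture for a room."""
    room_type = classify_room(area_m2)
    x, y, z = origin
    points = []
    for i, asset in enumerate(FURNITURE[room_type]):
        points.append({
            "P": (x + i * 1.5, y, z),   # position, spaced along the x axis
            "unreal_instance": asset,   # which asset Unreal should place
            "room_type": room_type,
        })
    return points
```

The tool itself never has to model a stove; it only decides where a point goes and which asset reference it carries.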

Another useful technique is to add tools to other tools. What I mean by this is that you implement a tool network inside another tool network, and then expose only the relevant parameters of the underlying tool in the main tool.

If that sounded a bit confusing, here's an example.

These antennas are generated with another Houdini tool, where you can control things like height, seed and cables. Since all tools are just a set of nodes, I can package those nodes and add them to the house generator network!

Let's say the antennas had a parameter for a ground attachment. I could now choose not to expose that parameter in the house tool, since I know that they'll only be placed on roofs.
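In code terms, nesting a tool and exposing only some of its parameters might look like the sketch below. The `Tool` class is a stand-in invented for this example, not a Houdini or Houdini Engine type, and the parameter names are illustrative.

```python
from typing import Dict, Iterable


class Tool:
    """A packaged node network, reduced here to a bag of named parameters."""

    def __init__(self, name: str, params: Dict[str, object]):
        self.name = name
        self.params = dict(params)
        self.exposed: Dict[str, "Tool"] = {}  # parameter name -> owning tool

    def embed(self, inner: "Tool", expose: Iterable[str]) -> None:
        """Embed another tool, surfacing only the listed parameters."""
        for key in expose:
            if key not in inner.params:
                raise KeyError(f"{inner.name} has no parameter {key!r}")
            self.exposed[key] = inner

    def interface(self) -> Dict[str, object]:
        """Everything a level designer can see and edit on this tool."""
        visible = dict(self.params)
        for key, owner in self.exposed.items():
            visible[f"{owner.name}_{key}"] = owner.params[key]
        return visible


antenna = Tool("antenna", {"height": 3.0, "seed": 7, "cables": 2, "ground_attachment": False})
house = Tool("house", {"wall_color": "red", "seed": 1})
# Antennas only ever sit on roofs, so "ground_attachment" stays hidden.
house.embed(antenna, expose=["height", "seed", "cables"])
```

`house.interface()` then lists the house's own parameters plus `antenna_height`, `antenna_seed` and `antenna_cables`, while the ground attachment setting never reaches the level designer.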

Here are some variations of possible outcomes. It's quite easy to get lost if you make the house too large and the rooms too small!

The Platform Tool

The other tool I'll talk about is our platform tool. This tool works a bit like a gray box replacer. Often while designing a level you start with gray cubes and primitive shapes to block out a level, to later replace them with more detailed geometry. We utilize these shapes to generate platforms, stairs and other things. This means that we don't have to spend as much time decorating and filling out a scene; instead we can have platforms as a base and then add more detail to them.

Working with procedural tools also means that I can add details, assets and other things to the platforms and then Fredrik can simply press recook and everything will be updated, without having to redo a single thing.

With Houdini Engine you also have the possibility to create collisions, which is very useful for this tool. Lastly, the following picture is a small breakdown of how the stairs are created in this tool. The walls, panels, railings are created in a similar fashion of breaking something out, modifying it and merging it back in.
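To give a feel for how a block-out box can drive generated stairs, here is a small Python sketch that splits a box into equal steps. It is not the Houdini network from the picture; the function name and the `max_riser` limit are assumptions made for the example.

```python
import math
from typing import List, Tuple

# A step is (x, z, tread, riser): where it starts along the run, its base
# height, how deep the tread is, and how tall the riser is. Width is omitted.
Step = Tuple[float, float, float, float]


def stairs_from_box(length: float, height: float, max_riser: float = 0.2) -> List[Step]:
    """Split a block-out box into equal steps no taller than `max_riser`."""
    if length <= 0 or height <= 0 or max_riser <= 0:
        raise ValueError("dimensions must be positive")
    count = math.ceil(height / max_riser)
    riser = height / count
    tread = length / count
    return [(i * tread, i * riser, tread, riser) for i in range(count)]
```

Resizing the gray box changes `length` and `height`, and the steps follow automatically, which is the whole point of driving geometry from block-out shapes.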

This concludes this week's devlog! Hopefully you've found it somewhat interesting to get a bit of insight into our workflow with tools! Until next time!

Hello! Hope you all are doing fine!

Welcome to another weekly devlog: this time about the sound redesign of our current red fusebox!

Back in May we exhibited a demo of our game at the Nordsken convention in Skellefteå. From there, we managed to gather some helpful data on several aspects of the game, both on things that worked and on things that could be improved. Touching upon the latter, one such thing is the tricky part of conveying information to the player regarding the state of an active puzzle (or part of it). An example of this, and what we will talk about in this devlog, is the fusebox.

--- Author: Pontus "Sparkles" Andersson ---

At the moment our fusebox doesn't have that many sounds connected to it, which doesn't aid a player's understanding of what's going on and what they have to do. Thus, the aim of this redesign is both to improve the feedback given to the player and to create a more unified sound experience when interacting with the fusebox.

Jumping into it, we first need to break down the fusebox into its individual components:

We have the breaker, which is essentially a handle or lever with two settings: engaged or disengaged. The state of the breaker dictates whether the fusebox is connected to or disconnected from an external grid.

We also have the main unit: the box containing a slot and lock for the fuse. The lock is meant to keep the fuse in place, and is required to be in the engaged (locked) position in order for the unit to work.

And last we have the fuse(s) which we slot into the main unit. These currently have two states: broken and healthy, with the latter state, surprise surprise, being the one you need.

These three components dictate the overall state of the fusebox, meaning all three of them need to be in the good state at the same time in order for the fusebox to function.
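The rule can be written down as a short Python sketch. The type and field names are made up for illustration, and treating an empty fuse slot as a third, non-working state is an assumption not taken from the game.

```python
from dataclasses import dataclass
from enum import Enum


class Fuse(Enum):
    MISSING = "missing"   # assumption: an empty slot counts as not working
    BROKEN = "broken"
    HEALTHY = "healthy"


@dataclass
class Fusebox:
    breaker_engaged: bool   # connected to the external grid
    lock_engaged: bool      # fuse is locked in place
    fuse: Fuse

    def is_working(self) -> bool:
        """All three components must be in their good state at once."""
        return self.breaker_engaged and self.lock_engaged and self.fuse is Fuse.HEALTHY
```

Any single component in its bad state is enough to keep the fusebox from functioning, which is why the player needs feedback on each of them separately.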

Here is a visual representation of the components and the current sounds attached to them:

The name of the sound gives a clue of when the sound is played, and the color correlates to whether that state is good (green) or bad (red).

As seen in the picture, there aren't many sounds that would help a player understand what's going on. The picture even looks empty. Additionally, the lock and breaker share the same sound effect for both of their states, and the fuse sounds the same regardless of whether it's healthy or broken. Hopefully, these are some of the things we can address.

This is the visual representation of the components and their sounds after the redesign:

Key changes include:

- The lock and breaker receiving their own interact sounds (with distinguishable state variations).

- The fuse sounding different depending on its state, as well as on whether the fusebox is connected to an external grid or not.

- The addition of an overall state sound.

I've rendered a demonstration video which includes the before and after sounds. I'll attach it below so you can have a listen yourself!

Link to video

That concludes this week's devlog! På återseende!

Hiya folks! TGIF. For the next 15 or so minutes I thought I'd share with you the gist of our current level design process. Or should that be environment design? The scene I'll put together isn't very thought-out from a level design perspective, but mostly intended to look good and carry that Mech-Sun feeling.

We currently work Dallas style, meaning I do most everything related to putting together a level: from blocking out the basic route, to ensuring it looks good and pleasantly cluttered, to optimization, level streaming, and collision. It can be a fair bit of workload, but it is also fairly liberating.

--- Author: Fredrik "Olaxan" Lind ---

Blackest Blackness

This is a completely empty scene in Unreal. Absent of light, skybox, post processing, birds, clouds, and joy. We'll throw a boring directional light in there and call it a day. I'll turn off auto-exposure too (using an unbound post process volume), so we can have a properly dark feeling.

In the darkness we can make out some shapes. These are created using Cube Grid, a UE5 tool that makes whiteboxing a breeze. The tool allows for the creation of meshes using a resizable voxel-esque cube tool, like Minecraft or MagicaVoxel.

Suddenly these shapes look all dope. We've enabled RTX. No, in reality these shapes have gone through Houdini Engine, a powerful procedural modelling tool which allows us to generate advanced geometry from inputs in Unreal. It gives us a fantastic starting point when designing a level, since we get to the 80% mark much quicker, although it's not sufficient on its own.

This is a good time to add some lights. Our real light blueprints come equipped with a reasonable light temperature and brightness. These light posts are 20W sodium vapour bulbs. They include moths, because moths add realism to everything.

Let's anchor the layout in place with some rusty junk, and add an open balcony at the side of the house for the player to enter through.

Actually, let's add a little platform for the robotic inhabitant to watch the Mechanical Sunset from.

(using our dynamic catwalk tool, which automatically ensures there's a railing where apt)

Probably a good time to add some clutter. I think it's important to add some detail quite early in the design process, to clearly indicate which area is meant for what purpose. You have to make some decisions and imagine yourself living there. Does any particular corner vibe with you as a cozy breakfast nook? Then of course that's where you should put the table.

Again using Houdini Engine, we can run a physics simulation for a couple of cables hanging from the central pylon. The simulation is baked into a static mesh, which allows us to have incredibly convoluted cables produced very cheaply. I've also added some supports to make the whole thing seem more dream-like and islandesque.

Finally we should add a friend. Had this been a scene for inclusion in Mechanical Sunset, this would be an NPC with a dialogue tree and living behaviour. It would have used the furniture, leaned against the railing, and commented on your progress. For now, it's just a skeletal mesh.

We're kindly but firmly waved off by Tutis, who has to finish moving house.

A few notes before signing off for the weekend! This is not a good tutorial, since it relies heavily on tools we've already produced. See it as a fun look behind the scenes.

For a scene meant to be included in the game, more careful attention to detail would be necessary. Player navigation was not considered, but playing the level during the early block-out stages would have made it possible to discern scale and manoeuvrability at that point.

The lighting could use more work, and the placement of carefully considered shadow-casting lights could produce a dramatic effect.

I'm thrilled to be working on a game where I can really make use of my love for strange yet cozy environments. (Thanks, Sven Nordqvist.) Hope this could serve as inspiration if nothing else!

Me and the Gumlin(s) wish you a pleasant weekend (where applicable)!

Another week, another devlog! This week has so far been a bit rough for me, as I have been struggling with the subject at hand: Inverse Kinematics (IK)!

This week and last I have been getting into IK, which stands for Inverse Kinematics. IK in this case refers to calculating the skeleton joint parameters (location, rotation) needed to place a joint in the right spot depending on the skeleton's surroundings (for example a hand and arm). That said, the mathematics behind IK can get very confusing. Luckily, using Unreal Engine, we don't really have to care about what is going on inside the engine; however, some difficulties remained. The IK built into Unreal Engine is very simple, which is a very good thing for most use cases as it lowers the threshold for who can use it. In my case, however, this led me down a rabbit hole.

--- Author: Simon "Vito" Gustavsson ---

Using IK in Unreal is, as said, very simple, as you only really have to give the location you want the joint to target and which other joints should follow. In this case that would be the ladder rung I want the hand to target, plus all the joints leading to the hand from the shoulder, which is simple enough. This is where the problem arose: there was no interpolation between the positions, so whenever I wanted the hand to switch to the next rung, it just teleported there.

Video titled "Before"

So this led to me having to come up with a solution for the interpolation. I of course googled the problem, but I couldn't really find a solution online, so my brain was going to have to work a bit harder. After some consideration I concluded that what I wanted to do was go from the old location to the new location, and I knew there existed blend nodes for general animations, so my first test was to blend between the old locations that had been used and the new location. Here I used a node called Blend Node by Bool, which in hindsight was the most foolish idea I have ever had; however, this node had a blend time, which made it enticing for me to try out. I did not like this solution, as there were many variables that needed blending and the animation code became too big and unreadable.

However, it also had a grand flaw: while the blending worked brilliantly, it would always lag behind. This is because Blend Node by Bool interpolates from the position given at the start of the blend. During this time, the solver does not update the target position, causing it to lag behind by however long the blend time is. This also made me understand why Unreal does not have a built-in blend for IK. This led me to try out all different kinds of blending between animations, and I found one I really liked that was about what I was imagining: just a simple interpolation with an alpha variable, which seemed to work fine. This is also where I found out you could save previous poses from your character (which I should have realized earlier), so I did not have to use so many variables for the damned bool node, which also fixed my problem with blend times.
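The "simple interpolation with an alpha variable" can be sketched outside the engine in a few lines of Python. This is not the animation blueprint itself: the class and method names are hypothetical, and the positions stand in for IK goal locations. The key point is that the blend goes from a saved previous goal to the live target, so the target can keep updating while the blend runs.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def lerp(a: Vec3, b: Vec3, alpha: float) -> Vec3:
    """Linear interpolation between two points; alpha is clamped to [0, 1]."""
    t = max(0.0, min(1.0, alpha))
    return tuple(x + (y - x) * t for x, y in zip(a, b))


class HandTarget:
    """Blends the IK goal from the previous rung to the current one."""

    def __init__(self, start: Vec3, blend_time: float = 0.25):
        if blend_time <= 0:
            raise ValueError("blend_time must be positive")
        self.previous = start      # saved goal from before the switch
        self.current = start       # live goal, may keep moving
        self.blend_time = blend_time
        self.elapsed = blend_time  # start fully blended

    def switch_to(self, new_target: Vec3) -> None:
        """Begin blending towards a new rung from wherever the hand is now."""
        self.previous = self.goal()
        self.current = new_target
        self.elapsed = 0.0

    def update(self, dt: float, live_target: Vec3) -> Vec3:
        """Advance the blend and follow the live target so it never lags."""
        self.current = live_target
        self.elapsed = min(self.blend_time, self.elapsed + dt)
        return self.goal()

    def goal(self) -> Vec3:
        """Current IK goal: previous pose blended towards the live target."""
        return lerp(self.previous, self.current, self.elapsed / self.blend_time)
```

Once the alpha reaches 1 the hand sits exactly on the live target, even if that target moved during the blend, which is the lag that the bool-based blend could not avoid.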

Video titled "After"

All said and done, I still do not like looking at how small the solution became in the end; it makes me feel stupid for making such a complex solution to begin with. Nonetheless, I still have some minor tweaks to figure out: right now it jitters a bit too much while climbing, and I also need some IK for going against walls and for the legs. But now I am going to take a little break from this and test out some new smart objects for NPCs in Unreal (maybe a topic for another devlog :P).

That is it for this week, hope y'all are doing well! Au revoir!

Howdy! Hope you're ready for the weekend (where applicable). I am! But before that, stay tuned for a devlog which, this week, will be about concept art.

Concept art is crucial for getting an idea across from my mind to Gs' modelling prowess, as well as for planning regions and level design ahead, so that the rough layout of various levels will be known in advance, before getting stuck in with Unreal.

--- Author: Fredrik "Olaxan" Lind ---

We need concept art for a lot of things, actually; from robots, to regions, to buildings and general atmosphere.

Here are three recent pieces showcasing a different architectural vibe than the red cottages we're used to seeing.

We want these buildings to look like they're built from scrap metal, which makes sense for our setting while also feeling a bit familiar, like an RV, or a shoddy house extension one might have seen.

Our game should feel cozy where robots live, so concept art must feature some lived-in detailing: like coffee pots, garbage, or tablecloths.

These kinds of concept art documents are meant to convey atmosphere more than serve as a 1:1 modelling blueprint: to provide a reference point for Gs to work towards, while still being able to take creative liberties and improvise.

Sometimes there's a need for concept art that's a bit more technical:

Previously there have been images of our many variations of robot concept art. This one is digital:

This one painted with pen and aquarelle:

But drawing robots is difficult: often you cheat with perspective and hide imperfections with an overabundance of details and overlap.

This becomes very hard to follow when handed over to a 3D artist!

Thus this, an attempt to boil down the essence of how I tend to draw robots into a simpler, easier method to follow. A sort of robot tutorial.

A different kind of concept art is the region overview, as described above.

Level design can be quite fun, and is definitely the subject of a future devlog, but it helps a lot to have a reference, so why not draw one?

Here's a rough overview of Newtram, a previously unseen area of the game.

with a continuation down into Lower Newtram.

Concept art is often done digitally. I tend to work more quickly with pens on paper rather than digital, where you can fuss over infinite detail.

The process for completing a piece of concept art in aquarelle (which, mind you, is MUCH slower than its digital counterpart, but more fun to do) is as follows:

1. Sketch out the basic idea in pencil.

2. Fill in contours using ink pens; I use Micron fineliners.

3. To preserve white space, I use liquid latex or masking fluid to block out things like reflections and pupils.

4. Do a pass of aquarelle, then remove the masking fluid, which is GREAT FUN!

Those are a few of the uses we have for concept art when developing Mechanical Sunset. There's more to be said, but as of posting this particular message it's officially the weekend!

Until next time!

Hello everyone! It's time for another weekly devlog update, this time about footsteps and sound!

But first, we know, we missed a week. We had our hopes up for our little Gumlin. We really did. But, unfortunately, it had found its way into a big supply of hallonbåtar, devoured them, and then dozed off for the rest of the week. Hopefully it has learned a lesson and next time it will actually deliver on that blog post. But let's return to the real update!

--- Author: Pontus "Sparkles" Andersson ---

SO, footstep sounds, something that can be very important for a video game depending on the genre and usage area. In many FPS games they give spatial cues about other characters location, which in many cases is a tactical advantage. Another example, as in our case, is that particular footstep sound can help with establishing the physical presence of the player's character in the game world. This is done by accounting for two factors: the physical characteristics of the character itself, as well as the physical material that is stepped upon.

We do already have footstep sounds in our game, but these were designed with a human character in mind. Since the player is not human anymore, there is an opportunity to re-designed them to match that fact. To have some inspiration to work towards, I imagined our character to sound something in the lines of a worker robot in a cargo bay -like a more flexible fork lift of sorts. In other words, heavy and clunky.

I collected a bunch of different sounds that could fit that description and started layering them and just trying stuff out. Here is and example of what a typical footstep event could look like in FMOD:

This particular event is supposed to be played when the player steps on a metallic surface. Currently there are 4 sound variations depending on the surface type: metal, wood, a specific catwalk sound, and some textile (carpet). I'll add some examples for you to listen to: Metal.mp3, Wood.mp3, Catwalk.mp3

I've also made it possible to mix and match, or blend, between materials. For example, should a carpet lie on top of a wooden floor (as they are known to do), we could blend in some of the textile footstep and lower the volume of the wooden one, like this: Wood_textile.mp3

In fact, it is extremely easy to blend any two (or more) material sounds together thanks to the implementation in FMOD. Currently every material and its corresponding sounds exist in a matrix view, and should you like to mix any two of them, you simply add one material's main event sound to the other one. It might become easier to understand with an image:

Each column corresponds to a unique surface type in the Unreal Editor. We can see that the Wood type only plays the wood event, but the WoodTextile type plays both the wood and textile events. From here it's also possible to tweak some pitching or play around with an equalizer to further customize the blend (or single material sound, for that matter).
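A minimal Python sketch of the matrix idea, for readers who prefer code to screenshots. It is not FMOD's API or the project's actual setup: the table layout, the volume numbers and the function names are all assumptions made for illustration. Only the surface names (Wood, WoodTextile and so on) come from the text above.

```python
# Hypothetical surface-to-event matrix. Each surface type (a column in the
# matrix view) maps to the footstep events it triggers and a volume for each.
FOOTSTEP_MATRIX = {
    "Metal": {"metal": 1.0},
    "Wood": {"wood": 1.0},
    "Catwalk": {"catwalk": 1.0},
    "Textile": {"textile": 1.0},
}


def add_blend(name, base, overlay, base_volume=0.5):
    """Register a blended surface: the overlay at full volume, the base lowered."""
    blended = {event: volume * base_volume
               for event, volume in FOOTSTEP_MATRIX[base].items()}
    blended.update(FOOTSTEP_MATRIX[overlay])
    FOOTSTEP_MATRIX[name] = blended


def events_for_surface(surface_type):
    """Return sorted (event, volume) pairs to play for one footstep."""
    if surface_type not in FOOTSTEP_MATRIX:
        raise ValueError(f"unknown surface type: {surface_type!r}")
    return sorted(FOOTSTEP_MATRIX[surface_type].items())


# A carpet on top of a wooden floor: textile blended in, wood turned down.
add_blend("WoodTextile", base="Wood", overlay="Textile", base_volume=0.5)
```

Adding a new blend is then a single `add_blend` call, which mirrors how adding one material's event to another column works in the matrix view.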

Looking Further Ahead

It's worth mentioning that this is the first iteration of new footstep sounds and there are some things I would like to improve. For example, there isn't that much that suggests that the footsteps belong to a robotic being. I would like to add some hydraulic whirrs or clinks that anticipate the character's step. That could really spice things up! However, that is something that will have to be put on hold for now, as these new sounds are good enough for the time being. I believe this is a step in the right direction (no pun intended).

Oh, one last thing! Gamescom is around the corner and we're super excited! While we are not attending physically ourselves this year, Mind Detonator will be there and spread our word for us! That being said, we have spent some time touching up previous pitch material and other stuff that is good to have in these scenarios.

That's it for this time! Thanks for reading, and should you have any questions or comments, we're available on our Discord.

A week fraught with hardship and frustration. Battling deadlines and the very essence of Unreal Engine 5 itself.

But now, devlog time!

--- Author: Carl "Floof" Appelkvist ---

Hello everyone, this is a continuation of our new weekly devlogs. This week it's my turn at the helm. My main focus this week has been fixing some pesky packaging issues with our game.

We use a couple of plugins to aid in the production of Mechanical Sunset. We use FMOD for audio and Houdini for the creation of sick tools like our ivy tool and snow tool (check last week's devlog), but none has been as problematic as Geometry Scripting. We use Geometry Scripting for our procedural machine walls and it's an excellent plugin, when in the editor.

Now, the hard part comes when trying to package the game for runtime (i.e., taking the project files and turning them into the standalone game). Editor-only plugins are not supported there, so packaging throws an error. So I thought, naively, "What if I just remove the plugin? The game shouldn't need to depend on an editor-only plugin when running." And then the pain started: errors left and right. Enabling it again didn't help, and trying to make the base classes editor-only really messed it up!

The Unreal Engine documentation really lacks in this department, so I turned to Discord. Thanks to an amazing user over at the Unreal Engine Discord server we got it working: it now builds and runs smoothly outside the editor. The worst part was that it was a relatively simple fix, IF YOU KNEW TO DO THAT, that is. And now... you will know too! (This will get technical.)

Emotional support Gumlin for the ride.

First of all: https://unrealcommunity.wiki/creating-an-editor-module-x64nt5g3 is a great tutorial for making an editor module, but there are some key things that aren't in this tutorial that I'll share with you.

Begin by checking your .uproject file. I use Notepad++ to edit its plugin and dependency settings:

Make sure your plugins are enabled, even if they are editor only.

[code]{
    "Name": "GeometryScripting",
    "Enabled": true
}
[/code]

Then go back to the top. Here you'll find your base module with the type Runtime; for us it's Maskinspelet. Now you'll have to make a similar module below it. The key differences are the name and the type, which becomes Editor, and I also changed the loading phase:

[code]"Modules": [
    {
        "Name": "Maskinspelet",
        "Type": "Runtime",
        "LoadingPhase": "Default"
    },
    {
        "Name": "MaskinspeletEditor",
        "Type": "Editor",
        "LoadingPhase": "PostEngineInit"
    }
],
[/code]

I also made an error here at first: in your .uproject file you might find the "AdditionalDependencies" line. Kill it with fire. There's no reason to declare your dependencies here; that is reserved for the .Build.cs script, which you create in the tutorial linked above. Having the "AdditionalDependencies" line AND declaring your dependencies in the build script WILL break it, so don't do it. This was the main problem I encountered, and it was the problem the users of the Unreal Engine Discord helped me fix.
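If you would rather not eyeball the file, the checks so far can be scripted. This is a small Python sketch, not an official Unreal tool: the function name and the warning texts are made up, and it assumes "AdditionalDependencies" sits inside a module entry, which is where the project templates usually put it.

```python
import json


def check_uproject(text):
    """Return a list of warnings for the contents of a .uproject file.

    `text` is the file's JSON as a string. The checks mirror the advice in
    this devlog and nothing more.
    """
    project = json.loads(text)
    warnings = []

    modules = project.get("Modules", [])
    if not any(module.get("Type") == "Editor" for module in modules):
        warnings.append("no module of type Editor found")

    for module in modules:
        if "AdditionalDependencies" in module:
            warnings.append(
                f"module {module.get('Name')} declares AdditionalDependencies; "
                "move them to its .Build.cs"
            )

    for plugin in project.get("Plugins", []):
        if not plugin.get("Enabled", False):
            warnings.append(f"plugin {plugin.get('Name')} is not enabled")

    return warnings
```

Reading the file with `open(path, encoding="utf-8").read()` and passing the result to `check_uproject` gives an empty list when none of the pitfalls above are present.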

Also, when editing your new .Build.cs file, make sure to put your third-party, editor-only and experimental plugins (along with UnrealEd) in the private section:

[code]PrivateDependencyModuleNames.AddRange(new string[] {"UnrealEd", "GeometryScriptingEditor" });

[/code]

And last but definitely not least, the thing that brought it all together: add the following to all your .Build.cs scripts, even the runtime module. This bit of code was the thing that finally resolved the case:

[code]if (Target.Configuration == UnrealTargetConfiguration.DebugGame
    || Target.Configuration == UnrealTargetConfiguration.Debug)
{
    bUseUnity = false;
}
[/code]

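And to summarize:

[olist]
[*]Keep your plugins enabled in the .uproject file, even the editor-only ones.
[*]Add an Editor module next to your Runtime module in the .uproject file.
[*]Remove any "AdditionalDependencies" line from the .uproject file and declare dependencies in the .Build.cs scripts instead.
[*]Put third-party, editor-only and experimental plugins (along with UnrealEd) in the private dependencies.
[*]Turn off unity builds for the Debug and DebugGame configurations in every .Build.cs script.
[/olist]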

Whew, and all that had to be completed by this week. We promised a publisher that we'll have it up on Steam before today. Also, this is my and Lukas "Gs" Rabhi Hallner's last day of work before our vacation!

Taking some time off will be great and the rest of the Gumlin team will be hard at work to keep updates regular and prepare our game for Gamescom!

(Editor's note: we're not currently on vacation, this is just a re-upload of an old devlog to our Steam community page.)

Hello people! I'm sorry for missing last week's devlog; this week I got reminded of it.

Right now I am working on creating destructible meshes with the new Chaos destruction tool that has been added to Unreal Engine 5.

The idea behind having destructible meshes in this case is having throwable and fragile items in the world (maybe a coffee mug, or a window) which you can break. It adds a lot of realism and interaction to a scene!

It would also be possible to have larger structures crumble for cinematic effect.

Chaos is so cool and satisfying when you get it to work, the keyword here being "when".

There are dozens of settings for each component in the item which need to be tweaked in order for it to feel even slightly realistic.

The Chaos destructible meshes are built using something called a "geometry collection", basically a collection of meshes.

You then take a mesh and create a geometry collection from it by fracturing it into smaller pieces.

In order to make the mesh not explode into a million small pieces upon spawning, there are parameter settings for the amount of force a single fragment can withstand before breaking away from the larger mesh.

There are also several parameters here for what is called "clusters". A cluster is a smaller piece of a fracture fragment, which has its own thresholds for how much punishment it needs to be put under in order to break into even smaller pieces.

In theory you can create a mesh that can be granulated an unlimited number of times, but that isn't very good for performance, one might suppose.
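To make the threshold idea a bit more concrete, here is a toy Python model. It has nothing to do with the actual Chaos API: the class, the field names and the numbers are invented for illustration, and real geometry collections track far more than a single threshold per piece.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """A piece of a fractured mesh that may itself split into smaller pieces."""
    name: str
    damage_threshold: float  # strain the piece survives before breaking apart
    children: list = field(default_factory=list)


def apply_strain(cluster, strain):
    """Return the names of the pieces left after applying `strain`.

    A piece stays whole if the strain does not exceed its threshold, or if
    it has no smaller pieces to break into.
    """
    if strain <= cluster.damage_threshold or not cluster.children:
        return [cluster.name]
    pieces = []
    for child in cluster.children:
        pieces.extend(apply_strain(child, strain))
    return pieces


# A hypothetical coffee mug: the handle is sturdy, the body shatters further.
mug = Cluster("mug", 10.0, [
    Cluster("handle", 50.0),
    Cluster("body", 20.0, [Cluster("shard_a", 5.0), Cluster("shard_b", 5.0)]),
])
```

Each extra level in the tree is another round of pieces to simulate, which is the performance concern mentioned above.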

Now the fun part, actually destroying them!

To destroy a mesh we need to create some kind of force to make the object buckle under the pressure. There are several types of force you can apply.

One way is to create a "force field." At the moment I have only tried a couple of them, but here are two examples:

Linear Force (explosionesque)

External Strain

The explosion is really cool, but I think the straining is even more impressive! It's so well done, and makes me feel like we have come a long way regarding physics in video games.

What impressed me was how the strain could get multiple outcomes depending on how the mesh crumbled under its own weight. Sometimes the middle part got so strained that it collapsed the other way (as seen in the two comparison videos).

I also accidentally scaled it and got some fun weird bugs but it still looks cool, at the very least!

We will see how many of these will find their way into the final game, but at least you can expect some degree of destructibility in Mechanical Sunset!

[i]Simon Gustavsson

Programmer at Gumlin Games[/i]

Hey everyone! Welcome to our first devlog published on Steam! This is actually devlog #15, so if you want to read our previous ones, join our community Discord! https://discord.gg/9dGM4GT86A

In this weekly devlog we talk about our level design tools and how we make and use them!

Lately I've been spending time on different Houdini tools, which we implement in our level workflow. The two tools that have received the most attention recently are our house tool and our platform tool. Both work in a similar fashion: the user simply adds boxes to the scene, adds the tool to the boxes and then uses the parameters provided by the tool.

Houdini Engine is a plugin which allows you to package a node network from Houdini, expose the desired parameters and then bring it over to another piece of software, in this case Unreal Engine.

Since the cooking and simulation are handled by Houdini, we can use a lot more solvers, functions and methods that wouldn't be possible in Unreal.

A scene comparison before and after adding the house & platform tools

The House Tool

Our house tool creates traditional Swedish cabins by using boxes. It creates both an interior and an exterior with many parameters and settings. For example, wall color, wallpapers, doors, room sizes and seeds for randomization. There's also support for additional details, like adding roof tiles, flower boxes, porches, balconies and antennas!

These tools can of course be used while still being in development; that's one of the great things about them. For example, a level designer could build a town with the tool, and when I later add additional details and parameters to the tool, they simply have to click a button for all houses to update.

Houdini also provides a live-sync feature between Unreal and Houdini, which allows me to see the result in the game engine while working in a different software. This helps a lot to troubleshoot and to find the causes for specific errors.

The house tool node tree

The house tool is still a work in progress, and there are many things I'd like to add in the future: interior furnishing based on room types, ladders and additional roof details. Also more options for wear and broken houses!

One feature I'm currently working on is a system to categorize rooms into types (living room, kitchen, etc.) and then populate them with appropriate furniture accordingly.

Fortunately, Houdini doesn't require you to model everything procedurally in Houdini. By using points with certain attributes you can tell Unreal Engine to place any model, blueprint or actor in your desired way. So by having a library of assets made in, for example, Blender or taken from asset packs, we can use those together with the tool!

For example, the doors placed by the tool are fully functional blueprints made by our programmers.
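The points-with-attributes idea can be sketched in plain Python. This is an illustration only, not the house tool's network: the asset paths, the room types and the even spacing rule are invented, and the `unreal_instance` attribute name is my recollection of what Houdini Engine for Unreal uses for instancing, so check it against the plugin documentation before relying on it.

```python
# Hypothetical asset library: room type -> assets to place in that room.
FURNITURE_LIBRARY = {
    "kitchen": ["/Game/Props/Stove", "/Game/Props/Table"],
    "living_room": ["/Game/Props/Sofa"],
}


def furniture_points(room_type, origin, width):
    """Return one point per asset, spaced evenly along the room's x axis.

    Each point is a dict with a position "P" and an "unreal_instance"
    attribute naming the asset that should be placed there.
    """
    assets = FURNITURE_LIBRARY.get(room_type, [])
    spacing = width / (len(assets) + 1)
    x, y, z = origin
    return [
        {"P": (x + index * spacing, y, z), "unreal_instance": asset}
        for index, asset in enumerate(assets, start=1)
    ]
```

The tool itself only has to emit the points; what gets placed there is looked up by name on the Unreal side, which is why assets made outside Houdini work fine.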

Another useful technique is to add tools to other tools. What I mean by this is that you embed one tool network in another tool network, and then expose only the relevant parameters of the embedded tool in the main tool.

If that sounded a bit confusing, here's an example.

These antennas are generated with another Houdini tool, where you can control things like height, seed and cables. Since all tools are just a set of nodes, I can package those nodes and add them to the house generator network!

Let's say the antennas had a parameter for a ground attachment. I could now choose not to expose that parameter in the house tool, since I know that they'll only be placed on roofs.
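In code terms, nesting a tool and exposing only part of it looks roughly like the Python sketch below. The classes are stand-ins and not Houdini's API; the parameter names follow the antenna example above, and `ground_attachment` is the hypothetical parameter that stays hidden.

```python
class AntennaTool:
    """Stand-in for the antenna tool and its full parameter set."""

    def __init__(self):
        self.params = {"height": 3.0, "seed": 0, "cables": True,
                       "ground_attachment": False}


class HouseTool:
    """Stand-in for the house tool, which embeds an antenna tool."""

    # Only these antenna parameters are promoted to the house tool.
    EXPOSED_ANTENNA_PARAMS = ("height", "seed", "cables")

    def __init__(self):
        self.params = {"wall_color": "red", "seed": 0}
        self._antenna = AntennaTool()

    def exposed_parameters(self):
        """List every parameter a level designer can see on the house tool."""
        names = sorted(self.params)
        names += [f"antenna_{name}" for name in self.EXPOSED_ANTENNA_PARAMS]
        return names

    def set_parameter(self, name, value):
        """Set a house parameter or a promoted antenna parameter."""
        prefix = "antenna_"
        if name in self.params:
            self.params[name] = value
        elif name.startswith(prefix) and name[len(prefix):] in self.EXPOSED_ANTENNA_PARAMS:
            self._antenna.params[name[len(prefix):]] = value
        else:
            raise KeyError(f"parameter not exposed: {name}")
```

The hidden parameter still exists inside the embedded tool with its default value; it just never shows up in the main tool's interface.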

Here are some variations of possible outcomes. It's quite easy to get lost if you make the house too large and the rooms too small!

The Platform Tool

The other tool I'll talk about is our platform tool. This tool works a bit like a gray box replacer. Often while designing a level you start with gray cubes and primitive shapes to block out the level, and later replace them with more detailed geometry. We utilize these shapes to generate platforms, stairs and other things. This means that we don't have to spend as much time decorating and filling out a scene; instead we can have platforms as a base and then add more detail to them.

Working with procedural tools also means that I can add details, assets and other things to the platforms and then our level designer can simply press recook and everything will be updated, without having to redo a single thing.

With Houdini Engine you also have the possibility to create collisions, which is very useful for this tool. Lastly, the following picture is a small breakdown of how the stairs are created in this tool. The walls, panels and railings are created in a similar fashion: breaking something out, modifying it and merging it back in.
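For a feel of what turning a gray box into stairs involves, here is a small Python sketch of one possible approach. It is not the node network from the breakdown picture: the function, the maximum riser height and the units are assumptions chosen for the example.

```python
import math


def stair_steps(box_height, box_depth, max_riser=20.0):
    """Split a gray box into equal steps.

    Returns a list of (step_start_depth, step_top_height) pairs. The step
    count is the smallest number that keeps each riser at or below
    `max_riser`.
    """
    if box_height <= 0 or box_depth <= 0 or max_riser <= 0:
        raise ValueError("dimensions must be positive")
    count = max(1, math.ceil(box_height / max_riser))
    riser = box_height / count
    tread = box_depth / count
    return [(index * tread, (index + 1) * riser) for index in range(count)]
```

Because the steps are derived from the box dimensions, resizing the gray box and recooking gives a new staircase without any manual rework.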

This concludes this week's devlog! Hopefully you've found it somewhat interesting to get a bit of insight into our workflow with tools! Until next time!
